28 March 2017

P-sol in a conglomerate countertop slab

Over the weekend, I saw this polished slab of countertop outside a hardware store in Berryville, Virginia. I stopped to check it out. What a beautiful conglomerate!

I noticed that many of the cobbles had pronounced weathering rinds:

Here’s another example:

Note the weird shapes of the grains around it – these embayed and flush grain boundaries are intriguing (yellow highlights):

These grain boundaries are indications that pressure solution has taken place in this rock.

They suggest this conglomerate was squeezed a bit, triggering pressure solution of certain minerals. Rocks rich in those minerals (probably quartz in this case) deformed with their most-highly-pressurized portions dissolving away (changing the shape of the cobble) as less soluble rocks pushed into them.

Explore the whole thing here:

0.85 Gpx handheld GigaPan by Callan Bentley

27 March 2017

Cleaved, boudinaged, folded Edinburg Formation southwest of Lexington

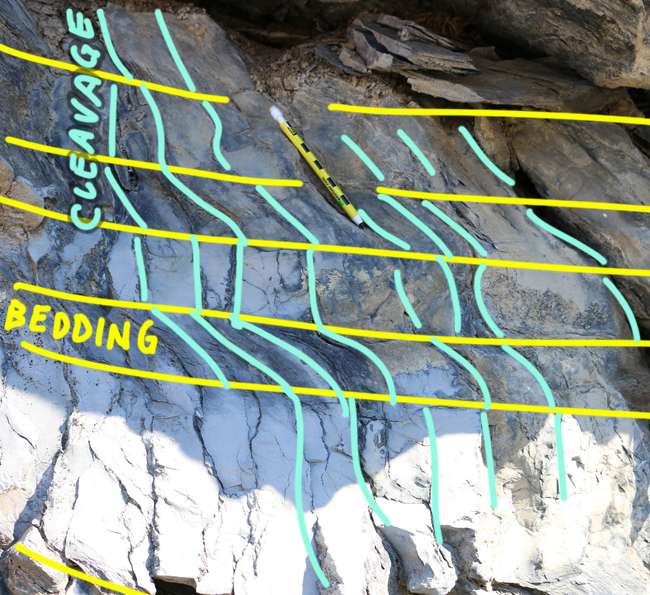

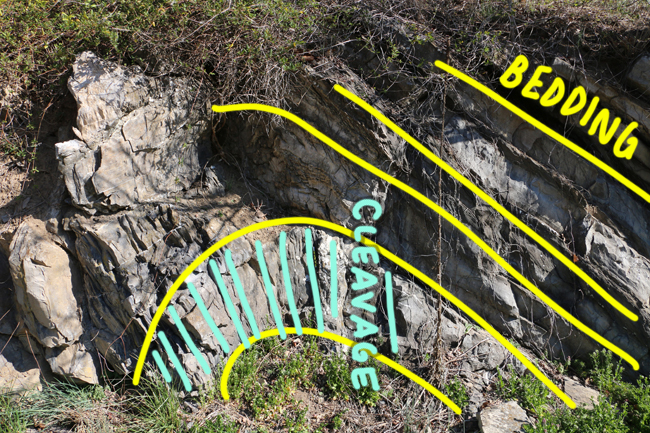

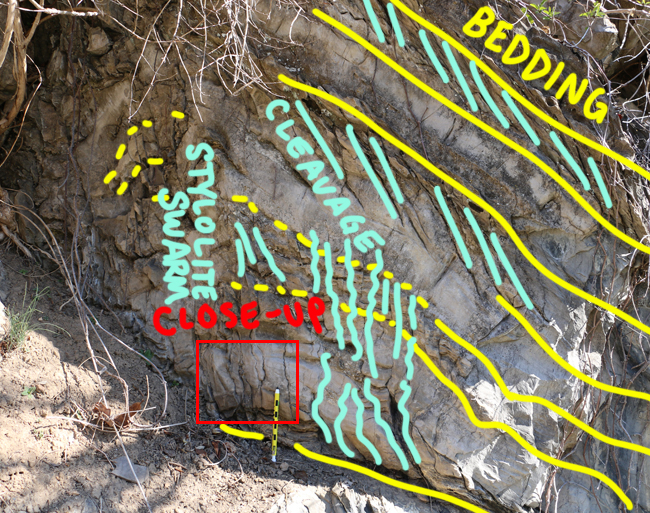

Examine these two photos, and ask yourself: what am I seeing here?

Got your answers?

Let’s take a closer look, one image at a time:

And now the second one:

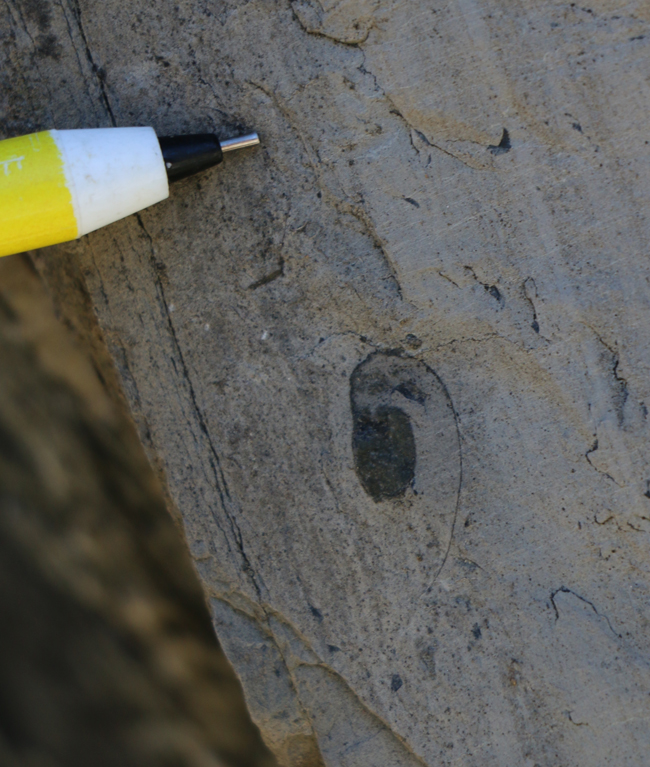

These are limestones and shales of the Edinburg Formation, an Ordovician-aged unit in Virginia’s Valley & Ridge Province that records the transition from passive margin sedimentation (a clean carbonate bank that prevailed over much of North America since the Cambrian) into the clastic signal deriving from the late Ordovician Taconian Orogeny. The limestone layers are fairly massive, and even include nice details like this fossil:

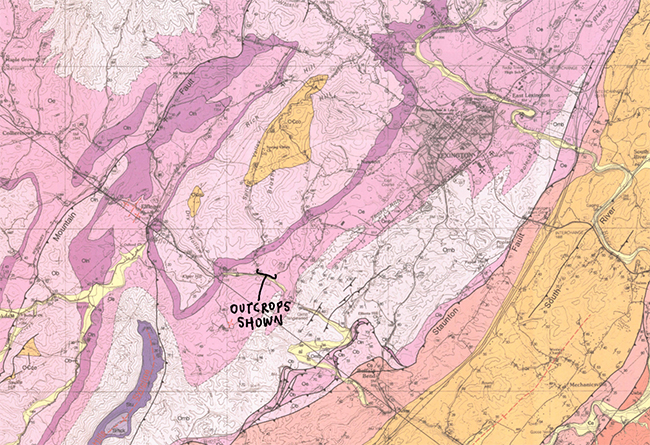

I visited these outcrops last week on the advice of my colleague Jeff Rahl of Washington & Lee University in nearby Lexington. Here’s a detail of the site from the Geology of Rockbridge County (Wilkes, et al., 2007):

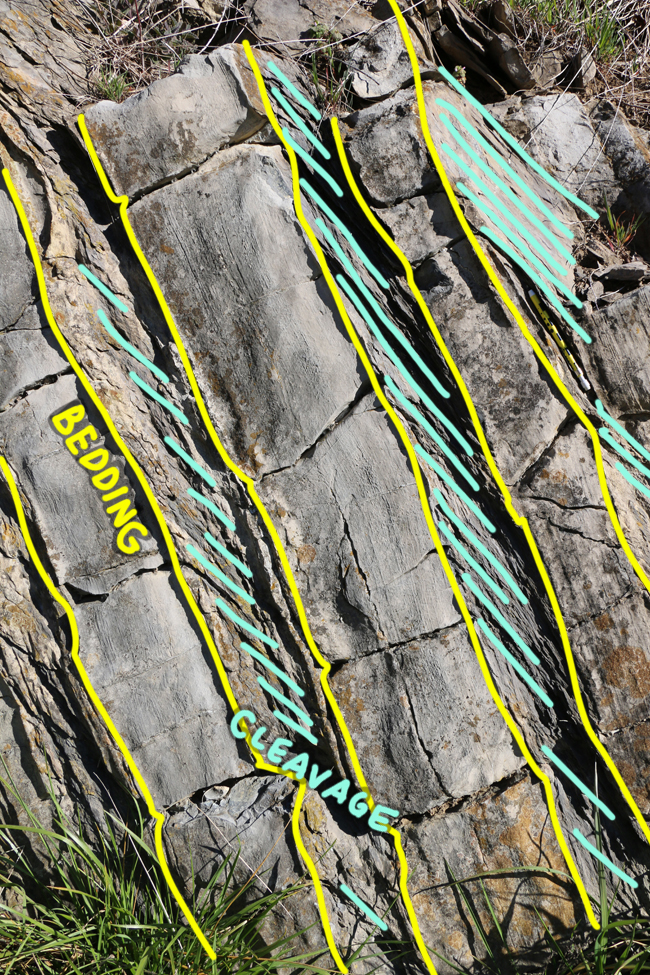

So why are the strata cleaved? When the Alleghanian Orogeny happened, in the Pennsylvanian into the Permian, these strata were squeezed. As a result, they folded, cleaved, and broke. Look at this fine outcrop, for instance:

Now zoom in to the center, to that shadowy realm just above the “cleavage” annotations:

Zooming in more, to the red “close-up” box:

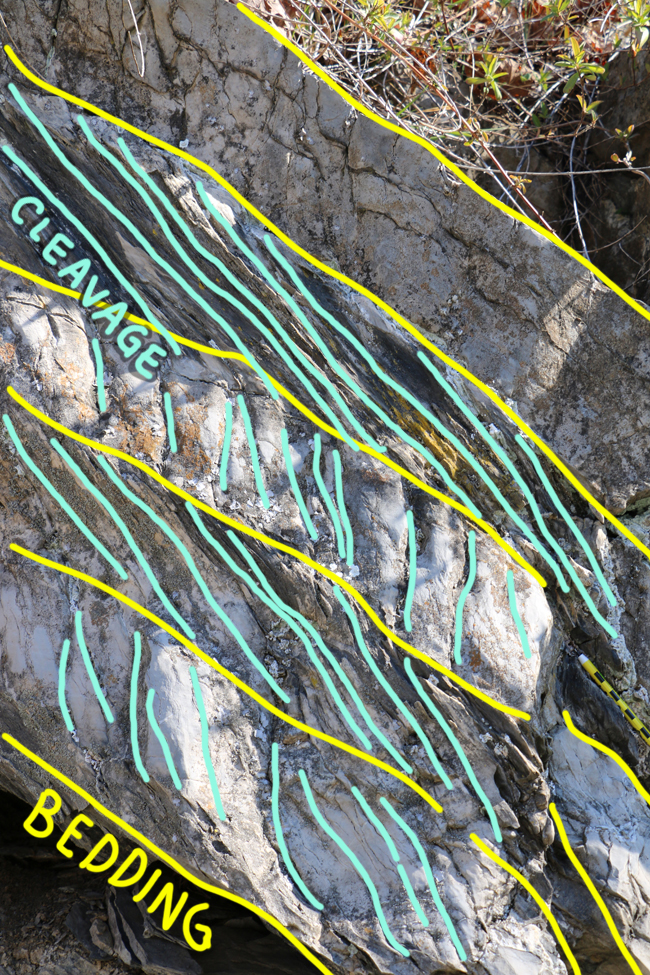

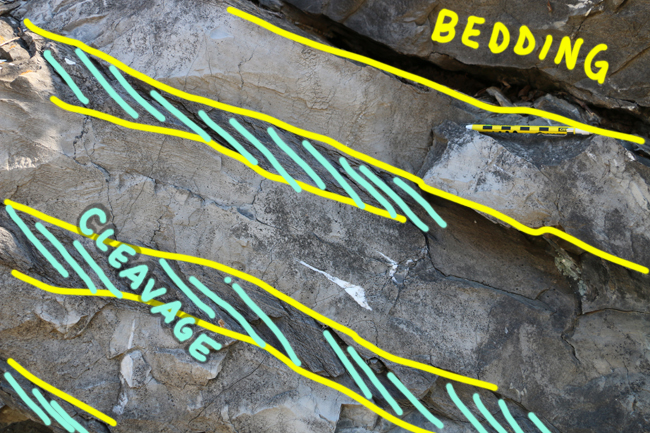

Stylolites and cleavage have a lot in common: both are the result of pressure solution, the propensity of certain minerals like calcite to go into solution when they are put under high pressure. Cleavage is more “distributed” through the body of the rock, while stylolites are “pressure solution localization” seams. Clay minerals help catalyze the dissolution of calcite, so cleavage is much better developed in the shale layers than in the limestones. The cleavage/stylolite swarms “refract” as they cross from one lithology to the next, as these next images show:

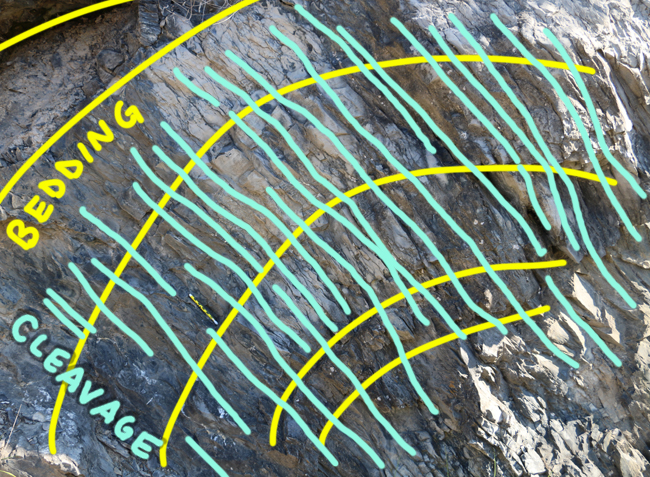

Here’s the crest of another anticline, showing a “fan” of cleavage orientations around it:

The left versus right sides of this fold illustrate an important concept: the relationship of bedding and cleavage in the detection of overturned beds. That brings us back to the image we started off this blog post with:

Here, bedding and cleavage both dip to the right side of the photo, but bedding is steeper (close to vertical) and cleavage is more shallow. That indicates these beds are (ever so slightly) overturned.

In contrast, here too the two planar features both dip to the right, but now cleavage is more steeply inclined, and bedding has the shallower dip. These beds are right-side-up:

Anyhow, zoom in on the core of this fold to see the cleavage:

Though the beds seen here aren’t overturned, the asymmetry of the fold is readily apparent: it’s steeper on the left (west), and more gently-inclined on the right (east).
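The bedding/cleavage rule used above can be written down as a tiny function. This is only a sketch of the two cases described in this post, where bedding and cleavage dip in the same direction; the function name, the "indeterminate" result for equal dips, and the example angles are my own illustrative assumptions, not field measurements from this outcrop.

```python
def bedding_facing(bedding_dip_deg, cleavage_dip_deg):
    """Apply the bedding-cleavage rule for two planes dipping the same way.

    Both arguments are dip angles in degrees from horizontal (0 to 90),
    and both planes are assumed to dip in the same direction.
    Cases where they dip in opposite directions are not handled here.
    """
    for name, dip in (("bedding", bedding_dip_deg), ("cleavage", cleavage_dip_deg)):
        if not 0 <= dip <= 90:
            raise ValueError(f"{name} dip must be between 0 and 90 degrees, got {dip}")

    if bedding_dip_deg > cleavage_dip_deg:
        # Bedding steeper than cleavage: the beds are overturned.
        return "overturned"
    if bedding_dip_deg < cleavage_dip_deg:
        # Cleavage steeper than bedding: the beds are right-side-up.
        return "right-side-up"
    # Equal dips: the rule as stated gives no answer (assumption of this sketch).
    return "indeterminate"


# Hypothetical angles, chosen only to mirror the two photos described above.
print(bedding_facing(85, 50))  # near-vertical bedding, shallower cleavage
print(bedding_facing(30, 65))  # shallow bedding, steeper cleavage
```

In the field you would of course want the dip directions too; the sketch simply takes "same direction" as a given, as in the two photos.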

There was also plenty of boudinage to be seen at the site. The limestone layers broke into sausage-link-shaped chunks and the “necks” between these chunks (boudins) were filled with calcite veins (white) and shale pooching in from neighboring strata.

Zoom in to the lower middle:

Zoom in to the upper right:

Another example, a short distance away, in a more “incipient” stage, but still showing differential weathering concentrated on the fractures that might have evolved into boudin necks:

One more example of boudinage of a limestone layer, showing very brittle deformation in the limestone (shards, surrounded by white calcite veins), with gentle inflection of neighboring shale:

Let’s close out this post with one more lovely example. Are these beds overturned or not?

Post your answers in the comments if you want credit!

22 March 2017

Pocketknife for scale

Check out this lovely granite:

Pocketknife for scale

…But there’s a trick!

(Scroll down for the big reveal!)

Check it out:

This is how Crocodile Dundee would say “That’s not a sense of scale… Now that’s a sense of scale!”

10 March 2017

Friday fold: Sheba Mine sample

When touring the geology of the Barberton Greenstone Belt last August, our group visited the Sheba Mine, a gold mine high in the hills. Their geologist kindly showed us around and allowed us to visit his history-laden office. I have no idea where this sample originated, but it was the only fold I saw in the place, nestled between sepia-toned photographs and old lanterns and rusty picks.

I wonder what the layers would have done if there was more to the sample at the bottom edge. Given the way they inflect inward on each limb, it’s a curious shape for a fold.

Happy Friday!

9 March 2017

Lugworm casts on the beach, Islay

Lugworms are marine worms that live as benthic infauna. You’d be lucky to catch a glimpse of one in the flesh, since they spend most of their time in the sediment, but you can see their traces if you walk the beach at low tide at Ardnave, in northern Islay, along the western shore of Loch Gruinart.

They look like this, a big extruded pile of mucus-bound sand:

Some still-submerged examples:

Each of these piles of castings consists of sand that was consumed through one end of the worm, had the good stuff digested, and then the indigestible mineral grains were ejected out the back end. In the photo above, note the color differences – this reflects differences in the concentration of heavy minerals in the sand – ilmenite and the like – relative to quartz. The lugworms are little miners, and we can assess their “tailings” (the word takes on new meaning in this context!) if we want a sense of the composition of the sediment just below the surface.

Two more exemplars, exposed above water, with a lens cap for scale:

The whole beach is a landscape of these features. Note the little ‘craters’ adjacent to the piles of casts – these are the holes leading to the “head” end of the worm. They dwell in a U-shaped burrow, with the castings being ejected at the “tail” end. So each pile corresponds to one pit.

There’s a complex concentration of lugworm tubes in this sediment – imagine if the sand were invisible and all you could see was the parabolic trace of these hundreds of U-shaped burrows. It would be an astounding density of upside-down arcs!

But the most important thing about lugworm casts? They are fun to squish underfoot as you run along the shore, as my son discovered!

8 March 2017

Q&A, episode 4

It’s time for a fresh edition of “you ask the questions” here on Mountain Beltway. Anyone can ask a question, serious or spurious, and I’ll do my best to answer it here. Use the handy Google Form to submit your questions anonymously.

Here’s this week’s question:

6. People often say that 97% of scientists agree on climate change being caused by human activities. Yet people write books and articles refuting this (maybe mostly non-scientists) but they seem to use data and are convinced of their position. Is there a legitimate argument against what these 97% of scientists say and if not, are these people just lying or making it up? Why would they do that? Is there no science to back up their claims?

That’s a great question. It turns out that I know the ideal person to give a clear, detailed answer. The responses below come from John Cook, a research assistant professor at the Center for Climate Change Communication at George Mason University. He founded Skeptical Science, a website which won the 2011 Australian Museum Eureka Prize for the Advancement of Climate Change Knowledge and 2016 Friend of the Planet Award from the National Center for Science Education. John co-authored the college textbooks Climate Change: Examining the Facts with Weber State University professor Daniel Bedford and Climate Change Science: A Modern Synthesis and the book Climate Change Denial: Heads in the Sand. In 2013, he published a paper analyzing the scientific consensus on climate change, a matter of some relevance to the question being asked!

I’ve recruited John’s help to get you an authoritative, complete response. Graciously, he agreed to take it on. I attempted to break the reader’s question down into bite-sized chunks that keyed in on the main subtopics that seemed ripe for discussion.

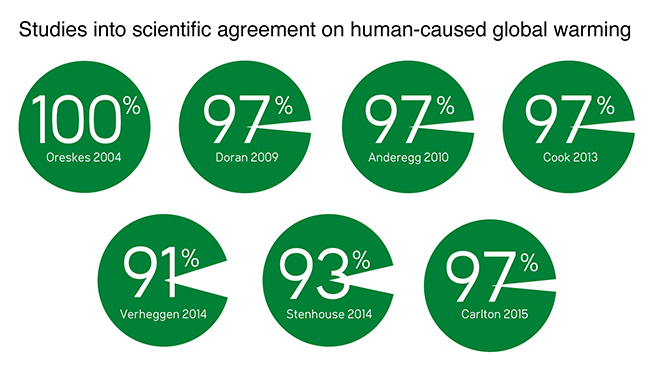

6a) Where does the 97% number come from? Is it from one survey or multiple surveys? Is it among specialists, the general public, or some other population?

A number of studies have found 97% agreement among climate experts that humans are causing global warming. A 2009 survey by Peter Doran and Maggie Zimmerman found that among climate scientists publishing climate research, 97% agreed that humans are significantly raising global temperature. A 2010 analysis of public statements about climate change by William Anderegg found that among the scientists who had published peer-reviewed climate research, 97% agreed that humans are causing most of recent global warming. I was part of a team that published a 2013 analysis of peer-reviewed climate papers – we found that among papers stating a position on human-caused global warming, 97% endorsed the consensus.

There have been other studies measuring the consensus. This motivated us to collaborate with co-authors of seven of the leading consensus studies (the paper is Consensus on consensus: a synthesis of consensus estimates on human-caused global warming). We found two key results. First, agreement on human-caused global warming increases with expertise in climate science. The highest agreement is among climate scientists publishing climate research. Second, among the group of climate experts, there was overwhelming agreement – between 90 and 100%, with a number of studies converging on 97%.

Skeptical Science

6b) Who are the remaining 3%, then? Can you give a representative example or two?

It’s difficult to give a representative example because there is no coherent story across the contrarian papers and scientists. They often argue positions contradictory with each other (e.g., arguing “climate change isn’t happening” versus “climate change *is* happening but not caused by humans”). This incoherence inspired us to coauthor a paper about the incoherence of climate science denial. Rejection of human-caused global warming isn’t about presenting an alternative, coherent explanation of global warming – it’s about manufacturing doubt in order to decrease public support for climate action.

6c) What data are they (the contrarians) using? Is their data complete? Is their data compelling?

We’ve published several studies examining the data and analysis in contrarian papers. In one study, we show that their methods consistently exhibit flaws and common mistakes. Probably the most common mistake is cherry picking – ignoring any data or research that is inconsistent with their argument. For this reason, contrarian studies are often found to possess significant flaws in subsequent research.

6d) Are they “just lying” or “making it up” or is something else going on?

It’s always problematic trying to guess what’s going on under the hood, and judge whether a person is intentionally trying to deceive versus genuinely believing misinformation. Psychological research tells us that this is especially difficult because from the outside, intentional deception looks exactly the same as cognitive biases that cause people to self-deceive. For example, misleading through cherry picking might be intentional, but it might be the product of confirmation bias – people ascribing more weight to evidence that confirms their beliefs. Similarly, appealing to fake experts might be a strategy to cast doubt on the scientific consensus, but people also ascribe more credibility and expertise to people whom they agree with.

6e) What do you interpret their motivations to be?

The biggest driver behind the rejection of climate science isn’t ignorance, stupidity or lack of education. The biggest driver of climate science denial is political affiliation, and the second biggest driver is political ideology. Political beliefs drive people’s views on the human role in climate change.

The reason for this is that supporters of free, unregulated markets dislike that one of the proposed solutions to climate change is regulation of polluting industries. Aversion to a solution leads them to deny that there’s a problem in the first place that needs solving.

It’s crucially important to understand this dynamic as it helps us distinguish between genuine skepticism and science denial. Skepticism is a good thing – a genuine skeptic considers the evidence then comes to a conclusion. A denialist, on the other hand, comes to a conclusion first then denies any evidence that conflicts with that conclusion. And science denial carries certain tell-tale characteristics.

So ideology and tribalism are the key drivers of climate science denial.

6f) Is there any hope of either the 3% or the 97% changing their minds? If so, what conditions might be required to make them re-evaluate their conclusions?

I would say those are two separate questions, given the asymmetry of the situation. For the 97% of climate scientists, the case for human-caused global warming is based on many independent lines of empirical evidence. We see human fingerprints all over our climate, painting a clear, coherent picture. Personally, to change my mind (and I’m guessing this is also the case for most of the 97% of climate scientists), it would require showing that not just one line of evidence is false, but that many or all of them are false. This is why the consensus on climate change is so robust – overturning a consilience of evidence is incredibly unlikely.

Regarding the 3%, the rejection of climate science is based on factors such as political affiliation or political ideology. It’s an unfortunate psychological reality that people whose views aren’t based on facts and evidence are unlikely to be persuaded by facts and evidence.

However, I would like to offer one ray of hope. Anecdotally, I know of a few people who have changed their mind about climate change and the lightbulb moment that at least began their journey was the realisation that they were being misled by misinformation. I’ve tested this principle in the lab by explaining a technique, or fallacy, of science denial. I found that across the political spectrum, when people were told about the technique that misinformation uses to mislead, they were no longer influenced by the misinformation. No one, whether they’re conservative or liberal, likes being misled. This communication approach, based on a branch of psychological research known as inoculation theory, is something I’m exploring further in my research at the Center for Climate Change Communication.

6g) Anything else you might like to add?

There has been one interesting response from opponents of climate action to the series of studies finding 97% consensus on climate change. One response has been “well, science isn’t done by consensus.” This is interesting because the retort comes from the same people who have been telling us for decades that there is no scientific consensus on climate change. Why tell us there’s no consensus if science isn’t done by consensus?

The long history of consensus denial goes back decades. In the 1990s, political strategist Frank Luntz advised Republicans to cast doubt on consensus in order to delay public support for climate action. This strategy continues to this day. If you’re wanting to delay climate action, it’s a good strategy. Psychologists have identified perceived scientific consensus as a “gateway belief”. The public use expert opinion as a heuristic, or mental shortcut, to guide their views on complicated scientific issues. If they think the experts disagree on climate change, they hold off on supporting climate action.

So it’s important that scientists communicate the high level of agreement on human-caused global warming, in order to address the misconception that scientists disagree. But they should do this with their eyes wide open – communicating the consensus may result in backlash from opponents of climate action. But this underscores the imperative of communicating the consensus – to fail to do so will allow opponents of climate action to continue to misinform the public and erode support for climate policies.

Thanks again to John for taking the time to share his expertise with my readers. If you’re interested in keeping up with his research, I recommend you follow him on Twitter. If you have other questions about science, the Earth system, or anything else, please ask them.

7 March 2017

A conversation with James Barrat

Yesterday I reviewed Our Final Invention, an accessible and provocative book about the development of artificial intelligence (AI) and the various ways it might represent a threat to some or all of the human species, all other forms of life on Earth, and (astonishingly) potentially even the very substance of the planet we dwell on (!). I strongly recommend everyone read it.

Today, I’m pleased to present a discussion with author James Barrat about AI. Barrat is a filmmaker, documentarian and speaker. He’s made movies for organizations like National Geographic, PBS, and the Discovery Channel.

You’re primarily known as a film-maker. What got you so into AI that you felt compelled to write a book about it?

Filmmaking was my introduction to Artificial Intelligence. Many years ago I made a film about AI and interviewed Ray Kurzweil, the inventor and now head of Google’s AI effort, Rodney Brooks, the premier roboticist of our time, and science fiction legend Arthur C. Clarke. Kurzweil and Brooks were casually optimistic about the time to come when we’d share our planet with smarter than human machines. They both thought it’d usher in an era of prosperity and in Kurzweil’s case, prolonged life. Clarke was less optimistic. He said something like this: Humans steer the future not because we’re the strongest or fastest creature but because we’re the smartest. Once machines are smarter than we are, they will steer the future rather than us. That idea stuck with me. I began interviewing AI makers about what could go wrong. There was plenty to run with.

Why write a book rather than make a film? Simply put, there’s too much information in a good discussion of AI for a film. A book is a much better medium for the subject.

On your website, you state that discussing AI is “the most important conversation of our time” – I’m convinced that you’re right, or at least there’s a strong likelihood you’re right. Can you share why you think it’s so important?

In the next decade or two we’ll create machines that are more intelligent than we are, perhaps thousands or millions of times more intelligent. Not emotionally smarter, but better and much faster at logical reasoning and doing the things that add up to what we call intelligence. A brief definition I like is the ability to achieve goals in a variety of novel environments and to learn. They won’t by default be friendly to us, but they’ll be much more powerful. We must learn to control them before we create them, which is a huge challenge. If we fail, and these machines are ambivalent towards us, we will not survive. My book explores these risks.

It’s an excellent book, and I think it’s better than Nick Bostrom’s Superintelligence as an accessible entry into the topic. Thank you for writing it. How does AI rank in your estimation relative to the threats such as nuclear war, human overpopulation, growing economic disparity, climate change, or destabilization of ecosystem “services”?

I think AI Risk is coming at us faster, and with more devastating potential consequences, than any other risk, including climate change. We’re fast-tracking the creation of machines thousands or millions of times more intelligent than we are, and we only have a vague sense about how to control them. It’s analogous to the birth of nuclear fission. In the 20s and 30s fission was thought of as a way to split the atom and get free energy. What happened instead was we weaponized it, and used it against other people. Then we as a species kept a gun pointed at our heads for fifty years with the nuclear arms race. Artificial Intelligence is a more sensitive technology than fission and requires more care. It’s the technology that invents technology. It’s in its honeymoon phase right now, when its creators shower us with useful and interesting products. But the weaponization is already happening – autonomous battlefield robots and drones are in the labs, and intelligent malware is on its way. We’re at the start of a very dangerous intelligence arms race.

Yeah, the discussion of Stuxnet in your book was sobering. I honestly think I’m going to start stockpiling food as a result of reading it. But there’s a huge disconnect between my reaction and the general populace. Why aren’t more people talking about it? The absence of a widespread public discussion about AI seems bizarre to me.

I wrote Our Final Invention to address the lack of public discourse about AI’s downside. I wrote it for a wide general audience with no technical background. I agree, we’re woefully blind to the risks of AI, but it will impact all of us, not just the techno-elite. Each person needs to get up to speed about AI’s promise and peril.

As I wrote in the book, I think movies have inoculated us from real concern about AI. We’ve seen how when pitted against AI and robots, humans win in the end. We’ve had a lot of movie fun with AI, so we think the peril is under control. But real life surprises us when it doesn’t work like Hollywood.

I thought Ex Machina addressed that reasonably well. It shows a reclusive Silicon Valley-type engineer building AI in his secret redoubt, but that “lone wolf” is kind of different from real-world economics.

Can you briefly discuss the motivations of the various academic, commercial, and military groups who are pursuing AI? Do we know who they all are? (If not, why would they be motivated to be secretive?)

A huge economic wind propels the development of AI, robotics, and automation. Economically speaking, this is the century of AI. Investment in AI has doubled every year since 2009. McKinsey and Co, a management consulting group, anticipates AI and automation will create between 10 and 25 trillion dollars of value by 2025. That almost ensures it’ll be developed with haste, not stewardship. As for the military, they don’t have the best programmers, who have gone to Google, Amazon, Facebook, IBM, Baidu, and others, but they have deep pockets. They’ll develop dangerous weapons and intelligent malware.

And yes, there are a lot of stealth companies out there developing AI. Stealth companies operate in secret to protect intellectual property. Investor Peter Thiel just unveiled one of his stealth companies which, it turns out, helped the NSA spy on us and subvert the Constitution.

Sheesh. But they’re not close, right? I mean: How close do you think “we” (i.e., one of these groups) are to making a true artificial general intelligence (AGI)?

That’s a tough question, and no one can get it right. That said, several years ago I polled some AI makers, and the mean date was 2045. A small number said 2100, and a smaller number said never. I think the recent advances in neural networks have moved AGI closer. I agree with Ray Kurzweil – about 2030, but it might be as early as 2025.

AGI will likely be the product of machines that program better than humans. And shortly after we create AGI, or machines roughly as intelligent as humans, we’ll create superintelligent machines vastly more intelligent than we are.

2030 is 13 years from the moment we’re currently discussing this – about a third of my own age. That’s really, really soon, considering how little preparation has been laid down. If we knew aliens were going to land on Earth 13 years from now, I suspect we’d be frantically preparing.

So based on your research and interviews, would you care to venture how much time will elapse between the achievement of AGI and the evolution of artificial superintelligence (ASI)? A “hard takeoff” seems like a most chilling scenario. But is it realistic?

I think the achievement of AGI will require all the tools necessary for ASI. The concept of an intelligence explosion, as described by the late I.J. Good, is worth exploring here. Basically, the tools we use to create AGI will probably include self-programming software, and the end goal of self-programming software will be software that can develop artificial intelligence applications better than humans can. I wrote a whole section in the book about the economic incentives for creating better, more reliable, machine-produced software. It’s fairly easy to see this capacity growing out of neural networks and reinforcement learning systems.

Once you’ve got the basic ingredients for an intelligence explosion, AGI is a landmark, not a stepping stone. We will probably pass it at a thousand miles an hour.

That’s sobering. What’s happened that’s relevant to understanding AI since the 2013 publication of Our Final Invention?

I think everyone thinking and reading about AI was surprised by how quickly deep learning (advanced neural networks) has come to dominate the landscape, and how incredibly useful and powerful it has turned out to be. When I was writing Our Final Invention, AI-maker Ben Goertzel spoke about the need for a technical breakthrough in AI. Deep Learning seems to be it.

Are there any tangible actions that concerned citizens should consider taking to protect themselves? Or is it just a case of crossing our fingers and hoping for the best?

Because it impacts everyone, everyone should get up to speed about AI and its potential perils. If enough people are informed, they’ll force businesses to develop AI with safety and ethics in mind, and not just profit and military benefit. And they’ll vote for measures that promote AI stewardship. But the clock is ticking and the field is advancing incredibly rapidly, and behind closed doors. The world would’ve been better off had nuclear fission not been developed in secret.

What are you working on next? Can we expect you to be a voice of informed commentary on AI in the future? Who else should people be paying attention to if they want to stay on top of this issue?

I recently finished a PBS film called Spillover and am developing a couple of other films right now. I’m speaking about AI issues to corporations and colleges, which I enjoy and take seriously as part of my mandate to get the word out. There is a dearth of good speakers about AI Risk. I’m thinking about another book about AI, and about making my book into a documentary film, but as I said, I’m a little skeptical about a film’s power to influence this issue. For more information I suggest looking up organizations such as the Machine Intelligence Research Institute and The Future of Life Institute.

Thank you, James…

… To be continued… I hope.

6 March 2017

Our Final Invention, by James Barrat

I am concerned about artificial general intelligence (AGI) and its likely rapid successor, artificial superintelligence (ASI). I have written here previously about that topic, after reading Nick Bostrom’s book Superintelligence. I have just finished another book on that topic, Our Final Invention, by James Barrat. I think it’s actually a better introduction to the topic than Bostrom, because it’s written in a more journalistic, less academic style. Most chapters read like a piece you might find in WIRED or the New Yorker. Attention is given to the personalities of the experts being interviewed, the quirky historical backstories, the setting where the interview takes place. It’s more people-centered, in other words – something important to remind you of what you value as a human, considering the rest of the content discussed. It’s more approachable. In addition, I valued reading it because Barrat is also more focused on the urgency of the risk of artificial intelligence (AI), whereas that’s only a part of Bostrom’s purview. Bostrom spends time on things like the rights of superhuman intelligences, but Barrat’s more focused on how hard it’s going to be to control them.

So if you haven’t given any thought to AI, I strongly encourage you to do so. It represents an economic force that will be deeply unsettling to civilization even if its deployment goes absolutely perfectly. And it is a topic that is of vital interest to humanity if it goes even slightly wrong. Multiple groups working for our government (defense, mainly), foreign governments, private industry and academia are working very, very hard right now to achieve artificial intelligence, and one of them is going to get there first. One of the greatest “take-aways” I got from Barrat’s book is how vital that “first mover” motivation is. There is a super strong strategic advantage to whoever achieves AGI first – and therefore a tremendous economic / military incentive to race to be the first. It dis-incentivizes the different players from trusting one another, sharing information, or coordinating their strategies for safety. It’s like developing the atom bomb, except that private companies beholden to their shareholders are also in the race, and the atom bomb starts acting on its own once it exists. Will Google win? Will DARPA? Will someone else? How much do we trust that winner to have put appropriate safeguards in place? How much do we trust them to wield their new superpower appropriately? This really matters. One of them will get there first, and it could be in 20 years, or it could be tomorrow morning.

Because of recursive self-improvement, the first AGI has a strong likelihood of becoming the first ASI, and if that happens we will be in a truly unprecedented situation – suddenly humanity will be communicating with, bargaining with, and evaluating the trustworthiness of something that is smarter than us, faster thinking than us, and also totally alien. Its intelligence will not be the result of millions of years of hunting and gathering on the savanna. It will not think like we think. It will be goal-seeking, powerful in ways we are not, and it will not share our values. It can copy itself, making multiple versions that may collaborate, widening the odds still further. Right now, a huge percentage of stock trading on modern Wall Street is conducted by “narrow” AI over the course of milliseconds. An AGI or ASI with access to those algorithms could make a lot of money in order to further its goals. If the AI cannot control a critical piece of hardware it needs to accomplish its goals, it might quickly spin up a fortune with which to entice a human accomplice or patsy. An ASI in search of more resources might decide to leave this planet and make a ship to do so. It might make self-replicating ships that turn asteroids into mines and factories and thus into new ships bearing a clone of the programming of the ASI. These hypothetical ships could travel space with far less risk than a human astronaut could, with none of the issues of radiation poisoning, need for nutrition, or cabin fever. Once ships like these depart Earth, we won’t be able to catch them. I know it sounds hyperbolic, but I don’t see a broken link in the chain of reasoning where it’s implausible or unlikely that the first ASI would take over the galaxy. As far as I can sensibly tell, this actually isn’t crazy talk. Could the stakes be any higher?

We have a situation where multiple groups of smart people (some we know about, some we don’t) are working on building a thing that will be as smart as us or smarter. Sooner or later, one of them will succeed. That success might be managed well, in which case its creators could accrue some serious benefits, which they may or may not share with a wider swath of humanity. If it is not managed well, there will be negative consequences that might range from small disasters to large catastrophes. And the odds are that it won’t be managed perfectly. How bad will the mismanagement be? The tool being mismanaged has unprecedented power, so what would be a small goof-up under the normal circumstances of deploying novel technology (e.g., the inadvertent combustibility of the Samsung Galaxy Note 7) suddenly carries much higher stakes.

Barrat spends time in the penultimate chapter documenting Stuxnet, a malware “worm” developed by US and Israeli intelligence which (a) probably succeeded in delaying Iran’s nuclear weapons program by a couple of years, but (b) is now being traded on the black market. It has the ability to zero in on a key piece of software controlling a physical component (uranium-enriching centrifuges, in Iran’s case) and break them. Barrat points out several relevant lessons to be drawn from the episode: (1) targeted software can compromise physical hardware with real-world consequences, (2) Stuxnet was programmed to seek out the centrifuges but ignore other components as part of its strategy of stealth, but the centrifuge-operating software “target” could be swapped out for something vital to a different system, like our increasingly-integrated “smart grid” of power supply, and (3) we didn’t do a very good job controlling Stuxnet, and that’s when the only competitors were mere humans. In reading Barrat’s depiction of the consequences of a sustained power outage on our nation (when there’s no electricity to run the fuel pumps at the gas stations, the trucks don’t run, which means groceries don’t get delivered, and people starve), I was surprised at the realization of how vulnerable I really am.

I encourage you to read Barrat’s first chapter. I can’t see how anyone reading the near-future parable of “the Busy Child” wouldn’t be motivated to read the rest of the book – and then Bostrom’s. As a society, we need to be talking about this. It needs to be high in society’s hierarchy of concerns, alongside ecosystem coherence and resilience, emerging diseases, antibiotic resistance, large asteroid/comet impacts, and climate change. We are not preparing the way we should be, and I think that’s largely because most people are unaware how close this threat really looms.

Please take the time to familiarize yourself with the basics of AI. I want to be able to talk about it with you without sounding like an alarmist loon. The best place to start would be Barrat’s book*.

______________________________________________________

* If you don’t have time to read a whole book, I’ll bet you have half an hour to invest in these two excellent blog posts by Tim Urban: The AI Revolution: The Road to Superintelligence, and The AI Revolution: Our Immortality or Extinction, or to watch Sam Harris’s TED talk on the topic.

3 March 2017

Friday fold: Kink folded Dalradian metasediments

Let’s reminisce back to the Walls Boundary Fault on the Ollaberry Peninsula of Shetland today. Here’s a 3D model to go along with the ones I posted last time:

It’s a little ragged, but so am I at the end of the workweek! Happy Friday. Have fun spinning this thing.

2 March 2017

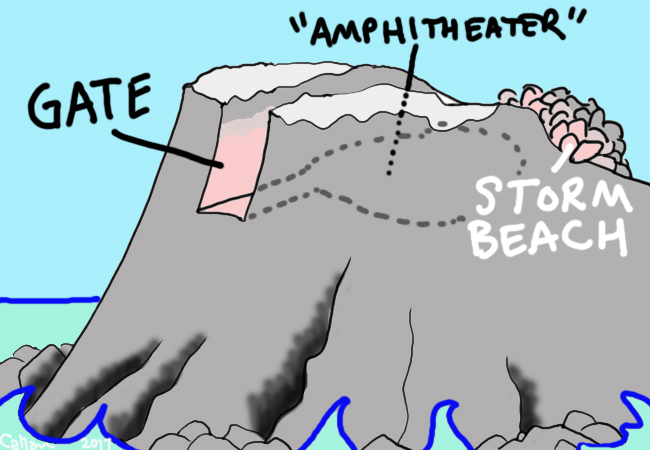

A virtual field trip to the Grind of the Navir

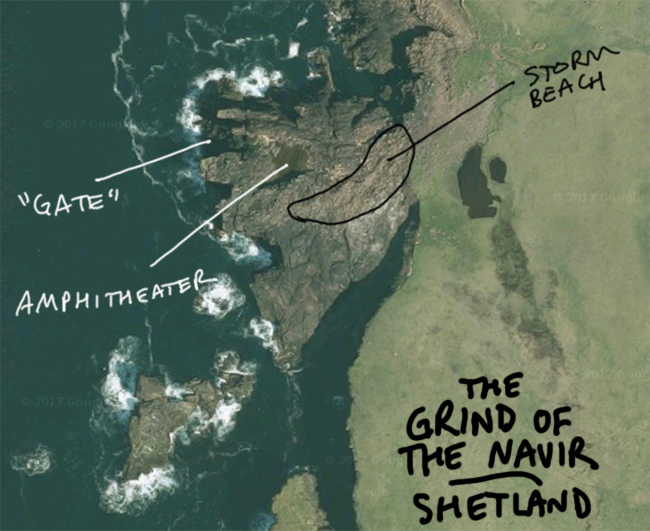

One of the coolest places I visited last summer in Shetland was also one I’d never heard of, the Grind o‘ da Navir, or Grind of the Navir, which translates to “The Gateway of the Borer.” It’s north of Eshaness, on the northwest coast of Mainland.

Perched high up a cliff with a “ramp”-like snout projecting into the Atlantic, the Grind of the Navir is an amphitheater-like flat area that’s been gouged out by storm waves that enter through a prominent slot-like “gate.” The gate is flanked by two prominent highpoints, the “gateposts” or “bastions.” During storms, waves roar between them, scouring the rock, a rosy pink Devonian ignimbrite, knocking out slabs along joints and the bedding plane. The resulting boulders, liberated from the bedrock, pile up at the back of the amphitheater. The pile represents a sedimentary deposit from the waves, and hence it is a “beach” in the strictest sense, but unlike any beach you’ve seen, considering it’s made of nothing but huge blocky boulders, and they are perched 15 meters above sea level!

Here’s a cartoon sketch of the set-up:

Here is an annotated Google Maps screenshot showing the “bird’s eye” view:

And here’s what it looks like when you visit…

Inside the “Amphitheater,” looking toward the “Gate”:

Jointed ignimbrite:

Fresh (pink/lavender) ignimbrite, and weathered (black) ignimbrite; this lets you figure out where boulders were most recently extracted:

A slab of ignimbrite, showing eutaxitic lenses and other chunky ingredients:

The “beach”:

Geotours Shetland guide Allen Fraser atop one of the “gateposts,” the South Bastion:

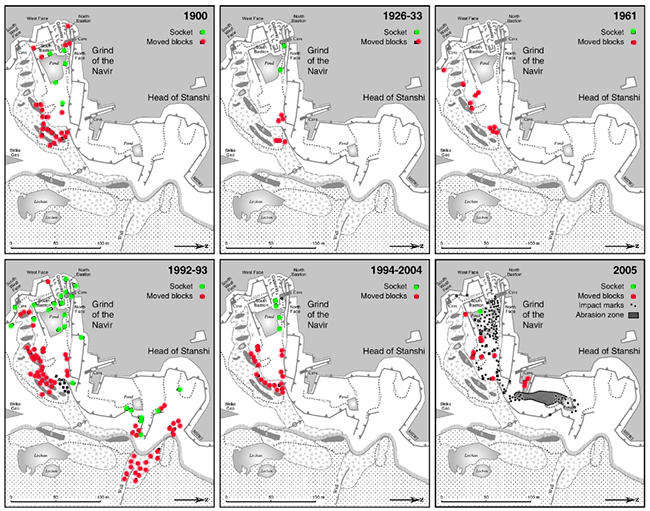

Hall, et al. (2008) published a fascinating study of the Grind, including this series of maps showing boulder movements over more than a century of time. I’ve color-coded it to show “sockets” (fresh pink semi-cubes where blocks of ignimbrite have been liberated) as green dots and moved boulders as red dots:

(Their figure 5)

Those dots show: This is a dynamic place!

See some other photos and some video of the storms at this website.

I made six GigaPans and three 360° spherical photos at the Grind. We’re trying out a new system for embedding them in these blog posts, without the use of Flash. But ironically, they don’t load up well for me, though hitting the “circle arrow” refresh icon seems to force them to load. I’ve included both Non-flash and Flash versions here. Hopefully one will work for you! Explore them (zoom in, go up/down, left/right) here:

A view of the Grind from the grassy marine terrace of the main part of the island:

Link 1.36 Gpx GigaPan by Callan Bentley

A little closer in, with a good perspective on the storm beach with the “gatepost” bastions just peeking over it:

Link 0.08 Gpx handheld GigaPan by Callan Bentley

A view of the amphitheater from inside, looking toward the “gateway” flanked by the two bastions:

Link 1.29 Gpx GigaPan by Callan Bentley

A view from the South Bastion “gatepost” out over the amphitheater toward the storm beach:

Link 0.06 Gpx handheld GigaPan by Callan Bentley

A look at joint control of boulder erosion (consider color):

Link 0.09 Gpx handheld GigaPan by Callan Bentley

A boulder of ignimbrite so you volcanologists can examine its petrology:

Link 0.45 Gpx GigaPan by Callan Bentley

360° spherical photo in the gateway:

Grind of the Navir, Mainland, Shetland #theta360 – Spherical Image – RICOH THETA

360° spherical photo in the amphitheater:

Grind of the Navir, Mainland, Shetland #theta360 – Spherical Image – RICOH THETA

360° spherical photo atop the South Bastion (with Allen Fraser in the background):

Grind of the Navir, Mainland, Shetland #theta360 – Spherical Image – RICOH THETA

This is a fairly extraordinary place in my decades of geological experience. It’s visually striking both in form and color, perched above the sea like a medieval rampart. I felt like a Tolkien character in its imposing setting. And then to contemplate the astonishing forces that would have etched out this hollow so high above the surf – why, it came close to blowing my wee little mind. Later in the trip, I saw some other examples of the same process, but none was so statuesque as the Grind of the Navir.

___________________________________

CITATIONS

Callan Bentley is Associate Professor of Geology at Piedmont Virginia Community College in Charlottesville, Virginia. He is a Fellow of the Geological Society of America. For his work on this blog, the National Association of Geoscience Teachers recognized him with the James Shea Award. He has also won the Outstanding Faculty Award from the State Council on Higher Education in Virginia, and the Biggs Award for Excellence in Geoscience Teaching from the Geoscience Education Division of the Geological Society of America. In previous years, Callan served as a contributing editor at EARTH magazine, President of the Geological Society of Washington and President of the Geo2YC division of NAGT.
